Overview

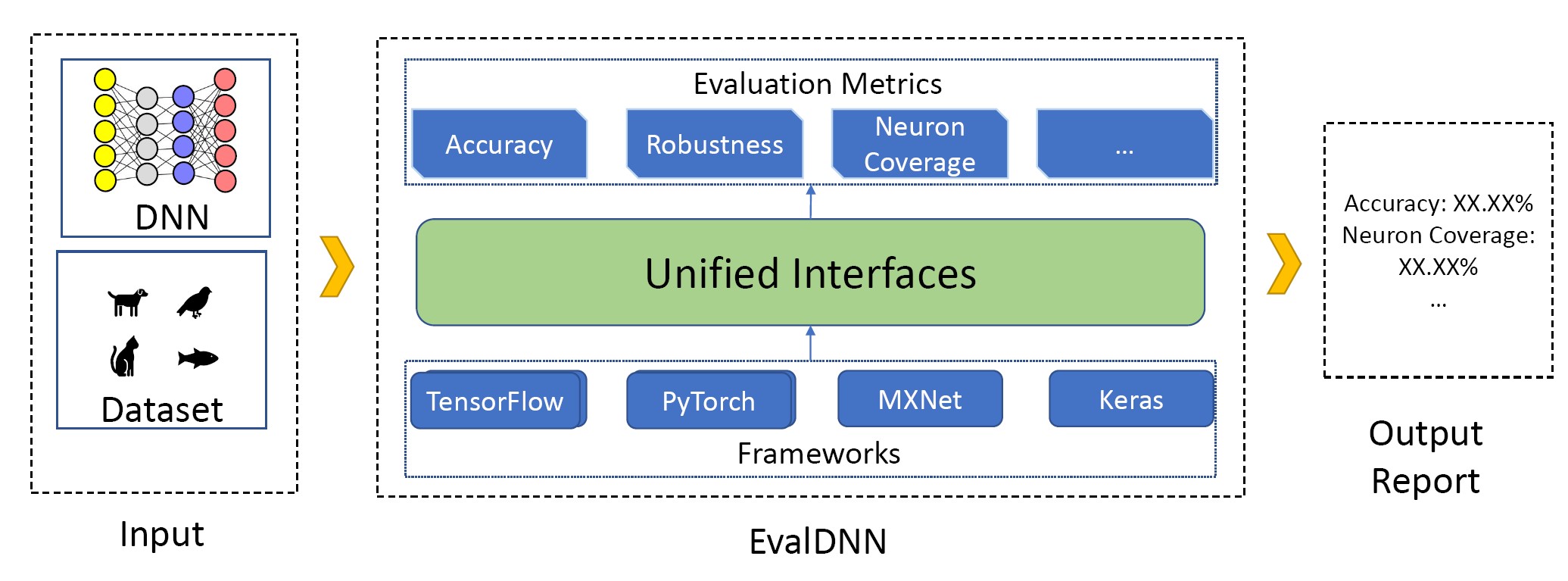

EvalDNN is an open-source toolbox for model evaluation of deep learning systems, supporting multiple frameworks and metrics.

Authors: Yongqiang Tian*, Zhihua Zeng*, Ming Wen, Yepang Liu, Tzu-yang Kuo, and Shing-Chi Cheung.

*The first two authors contributed equally.

This project is mainly supported by the Microsoft Asia Cloud Research Software Fellow Award 2019.

Demo video

Supported Frameworks and Metrics

EvalDNN supports models built with the following frameworks:

- TensorFlow

- PyTorch

- Keras

- MXNet

EvalDNN evaluates models using the following metrics:

- Top-K accuracy

- Neuron Coverage

- Robustness
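
To make the first two metrics concrete, here is a minimal, framework-independent sketch in plain Python. It is not EvalDNN's API: the function names `top_k_accuracy` and `neuron_coverage`, their parameters, and the sample data are all hypothetical. The coverage function assumes the common definition (the fraction of neurons whose activation exceeds a threshold for at least one input) and assumes the activations have already been extracted and flattened into one row per input.

```python
def top_k_accuracy(scores, labels, k=1):
    """Fraction of samples whose true label is among the k highest-scoring classes.

    scores: list of per-class score lists, one row per sample (hypothetical input format).
    labels: list of true class indices, one per sample.
    """
    hits = 0
    for row, label in zip(scores, labels):
        # Rank class indices from highest to lowest score.
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / len(labels)


def neuron_coverage(activations, threshold=0.0):
    """Fraction of neurons activated above `threshold` by at least one input.

    activations: list of rows, one per input, each holding one value per neuron
    (assumed to be already extracted from the model and flattened).
    """
    num_neurons = len(activations[0])
    covered = [False] * num_neurons
    for row in activations:
        for i, value in enumerate(row):
            if value > threshold:
                covered[i] = True
    return sum(covered) / num_neurons


# Illustrative data only; not taken from any real model.
scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5]]
labels = [1, 1, 0]
activations = [[0.0, 0.9, 0.1], [0.0, 0.2, 0.6]]

top1 = top_k_accuracy(scores, labels, k=1)
top2 = top_k_accuracy(scores, labels, k=2)
coverage = neuron_coverage(activations, threshold=0.5)
```

With this sample data, one of three samples is correct at k=1 and two of three at k=2, while two of the three neurons exceed the 0.5 threshold for at least one input. A real evaluation would obtain `scores` and `activations` from a model in one of the frameworks listed above.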

Usage of EvalDNN

Please see the documentation in the EvalDNN GitHub repository.